Artificial intelligence is a rapidly spreading tool at universities across the nation, presenting unique challenges for faculty and staff. From the history department to the School of Media & Communication in Jody Richards Hall, WKU faculty have differing opinions on AI.

Because of its growing use, many professors have had to incorporate policies into their syllabi regarding AI. Over the summer, WKU published a news release encouraging staff to begin thinking about how to address AI usage.

“While we will each approach AI differently in our classrooms, I urge you to be thoughtful and purposeful in your communication with students about the role AI might play in your courses,” Provost Robert “Bud” Fischer stated in the release. “If you have not already developed an AI policy for your syllabus, please consider adapting one of the suggested statements provided on the WKU Syllabus page that best matches your teaching philosophy and course goals.”

Kate Brown, an associate professor in the history department, had to add policies regarding AI to her syllabus for the fall 2023 semester.

Her policy prohibits the use of AI for any work in her classroom. If she suspects a student of using it, the student has three options: receive a zero on the assignment, receive a zero unless the student proves the work is original, or receive a zero but submit another assignment in its place for substitute credit.

“I was irritated,” Brown said. “But I wasn’t irritated because there was another policy to add, what irritated me is that Western Kentucky, I feel, gives no guidance. CITL [Center for Innovative Teaching and Learning], as far as I am aware, is only supporting how to use AI in the classroom and not how to help humanities professors like me who want to keep it out.”

However, over winter break, Fischer sent out an email to faculty and staff regarding work the faculty is doing to investigate how to use AI. Brown said she felt this is the university’s way of beginning to support professors who feel similarly to her, altering her feelings about the lack of direction.

“Since I had that initial feeling of Western leaving me high and dry, it seems like Western is being somewhat proactive,” Brown said. “Do I feel like I have an answer to my problem of it shouldn’t be used in humanities at all and how do I affect that? No, but Western is not as asleep at the wheel as I kind of originally felt when I put that statement in the syllabus.”

Brown emphasized that she knows WKU leadership is doing the best they can, knowing that AI is uncharted territory for most people.

“I just want to stress that I do know that Western is moving in directions to address AI,” Brown said. “But in the meantime, I still feel like they could do more. I just feel like I need to know, even if it’s not Western, can someone point me in a rational way to do a history class? Where you know AI isn’t a threat to my students learning how to think critically and write persuasively? I just don’t know the answer.”

Taylor Davis, professional in residence and instructor in the advertising program, instituted her AI policy when she started at WKU last semester. As someone who uses AI in her personal and work life, she recognized the need to adapt it in a way that works for her within her classroom.

Her syllabus policy highlights three main points: the bias in AI, ethical implications and an overreliance on AI. She said that she has not felt any pushback from the university in terms of her using AI within the classroom.

“You know, I think universities are still trying to figure out where to use it and how best to use it in our schools, and specifically in the School of Media & Communication,” Davis said.

Davis has already taken the step to “figure out” where to use it, incorporating it within her assignments. She encourages students to use it, but not overly rely on it, as stated in her syllabus policy. She says it is a digital tool available for her students.

The AI tool most commonly used in classrooms is ChatGPT, a chatbot that launched in November 2022. Users ask it questions and receive a generated response based on a variety of sources. These sources can include news articles, scientific journals, Wikipedia and many others.

Within her classroom, Davis encourages students to use ChatGPT as a starting point. Students may put the assignment prompt into the AI software and review the generated responses. Students are meant to review the response they receive from the AI and see how it aligns with what they are learning in class, Davis said.

Davis said a lot of her views on AI reflect her use of it in her personal and professional life. Being a professor is not her full-time job, as she also works in the corporate world as a marketing director for a healthcare company.

“When you’re part of a corporate marketing team, sometimes, you talk about the same thing over and over and over and over again about your brand,” Davis said. “So we’ll use it to brainstorm new ideas … A lot of time it comes up with ideas that it thinks are creative, but are pretty corny, to be honest. So some are good, some are bad, but without that professional lens and experience and learning that we’ve all had, we may not know what is good or bad.”

This is the main reason she promotes the usage of AI. Learning the strategies, paying attention and being in class to gain that foundation is what allows students to use the tool effectively in their future careers, Davis said.

On top of this, she also uses it in her daily life. She used the example of uploading the different items in her freezers and cabinets in hopes of recipes being generated.

“It just does that calculation from the big data and makes things a little bit easier for you,” Davis said.

With technology growing and the uses of AI expanding, Davis recognizes that universities are having to adapt to these changes. She believes that universities are going to have to learn how to incorporate it in the classroom setting, but it is ultimately not changing the way they function.

“Certainly, I do think that it will impact classrooms, and that’s me coming from a professional in residence perspective, versus maybe more of, I’ve just entered academia and there are professors who have been here much longer than me and have PhDs,” Davis said. “I think the classroom setting with AI, learning how to use it as a tool, learning where and when it is appropriate to use. Those are all things we have to figure out as well.”

However, some universities and faculty members have taken a much more head-on approach to adapt AI into their lessons.

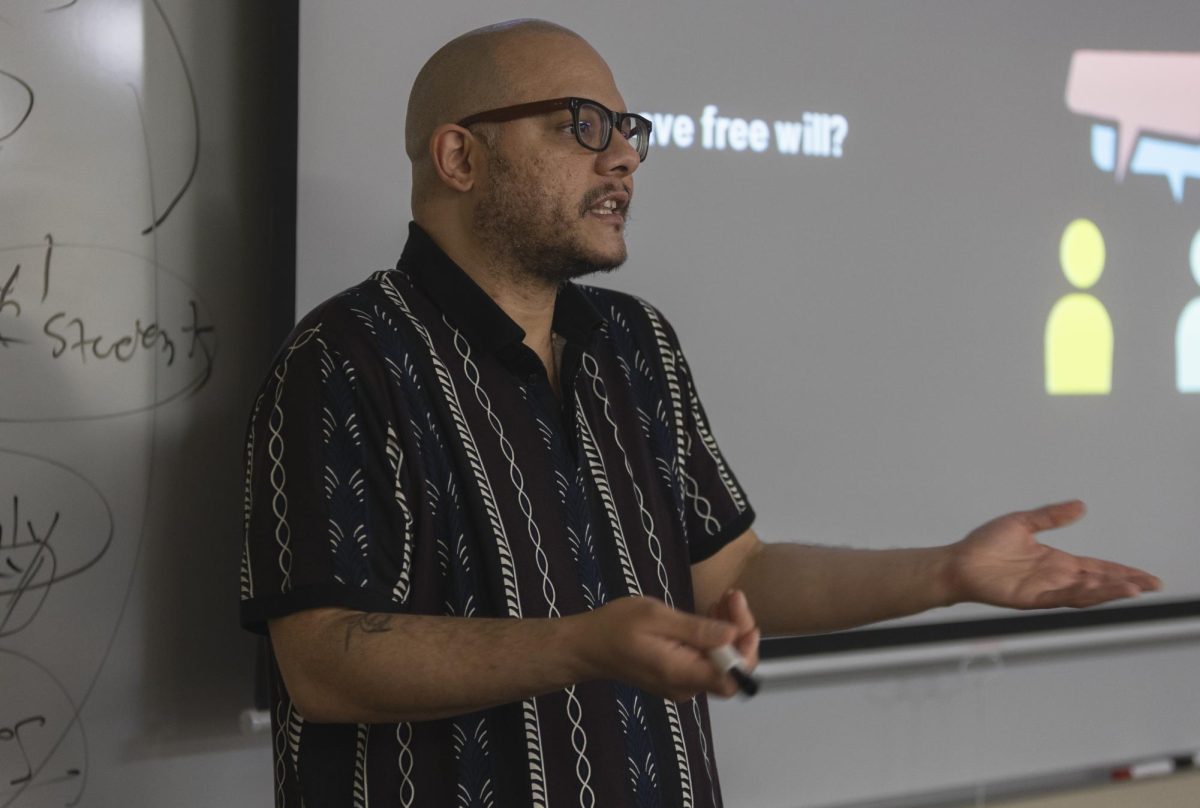

Marcus Brooks, assistant professor in the sociology and criminology department, elaborated on alternative universities that root their coursework in AI. He said the rise in these alternative universities began with a man named Jordan Peterson, psychology professor and Chancellor of Ralston College, a liberal arts college in Savannah, Georgia.

“He got famous a few years ago, like 2015, 2016, when in Kent – he’s Canadian – there was a bill about misgendering students, and he got famous because he’s like, ‘I refuse, I’m not going to use whatever fake pronouns you want to use,’” Brooks said. “So he got famous, and he kind of built a career on this.”

The bill in question, Bill C-16, is an act that includes transgender and gender-diverse Canadians under human rights and hate crime laws. Peterson felt this bill was an effort to indoctrinate children and create oppressive spaces, Brooks said.

In turn, Peterson announced that he was creating an online university, now formally called Peterson Academy. This university is completely online with no professors. AI is incorporated into the lectures and all courses, including coursework, and is preprogrammed by Peterson and other professionals.

“Education, affordable to all, taught by the best,” the Peterson Academy website states. “Learn how to think, not what to think. Online university. Coming soon.”

While supporting the use of AI in moderation, Brooks does not think these alternative colleges will be accredited, let alone work out in the long run.

“I personally think they’re money-making schemes for the people who start them,” Brooks said. “But if you asked me, I would say it’s a lot of those same people who want to defund universities and offer these alternatives as AI alternatives, are the same ones who are going out saying colleges are too woke and indoctrinating students, and that’s just kind of the rhetoric they use.”

Though Brooks does not see alternative colleges becoming something more than an idea, he does think that AI is changing the way professors are having to teach.

Last December, he switched his assignments from primarily discussion board-based to multiple choice questions and true or false quizzes. By doing this, he discovered different AI Chrome extensions that produced the answers to the questions asked in these assignments.

“Because, again, even in that example, I was like, okay, well, they can copy and paste it and ChatGPT will give them a paragraph, but they can’t put a multiple choice in ChatGPT,” Brooks said. “But now, apparently they can. So, I’m not sure what that looks like long term. But I think it’s definitely something that we need to be thinking about.”

Despite Brooks’ mixed feelings on AI and its uses within the classroom, he recognizes that students sometimes feel it is their last resort.

“I know, I was an undergrad in college too, obviously, sometimes life is happening, and we just need to get that assignment done,” Brooks said. “But the thing is, when I’m in the classroom, and I’m getting to know my students, and I really tried to share with them is like, these four years, this is a unique time in your lives, and take advantage of it. Like, when else in your life [do] you have four years – I know a lot of you have jobs and responsibilities – but for a lot of you, one of your main jobs is just to learn, and when else do you have that opportunity?”

News Reporter Shayla Abney can be reached at [email protected]